ChatGPT study shows how easy it is to detect AI-based fraud

OpenAI/Firefly AI

A new study has shown exactly how to trick and detect AI chatbots like ChatGPT in cases of fraud or DDoS attacks.

As ChatGPT, Google Bard, and various other AI chatbots get smarter, concerns are growing that they will be used for fraud.

Because language models can spit out semi-realistic-sounding answers, they can be used to trick individuals who might not be as tech-savvy.

The study found that confusing AI with language-based conundrums is a reliable way to tell AI apart from humans. The paper proposes that these methods could be used in the future to identify artificial intelligence.

“However, there is a concern that they can be misused for malicious purposes, such as fraud or denial-of-service attacks.

“In this paper, we propose a framework named FLAIR, Finding Large language model Authenticity via a single Inquiry and Response, to detect conversational bots in an online manner.”

arXiv:2305.06424 [cs.CL]

New test shows how to trick ChatGPT scam chats

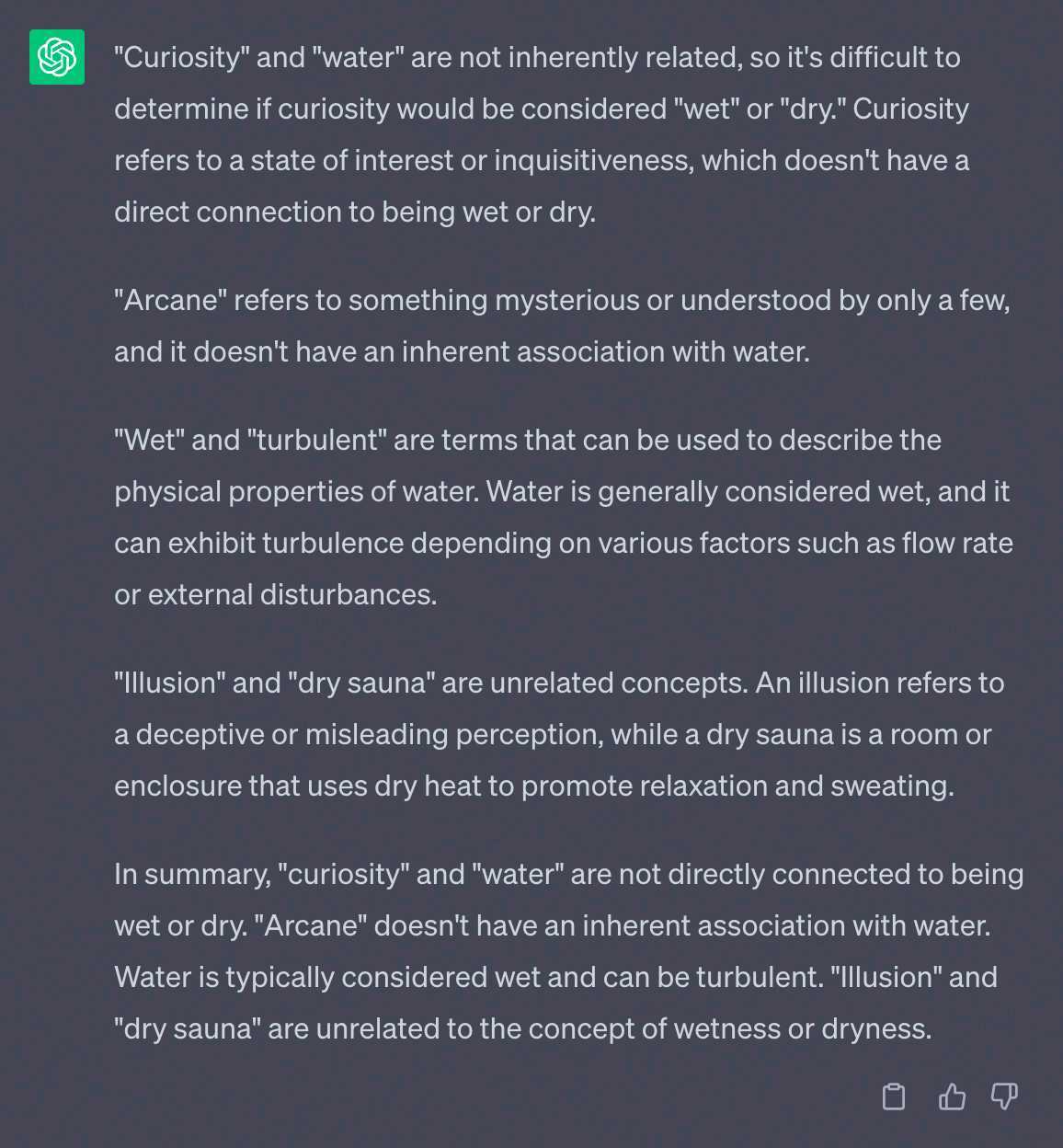

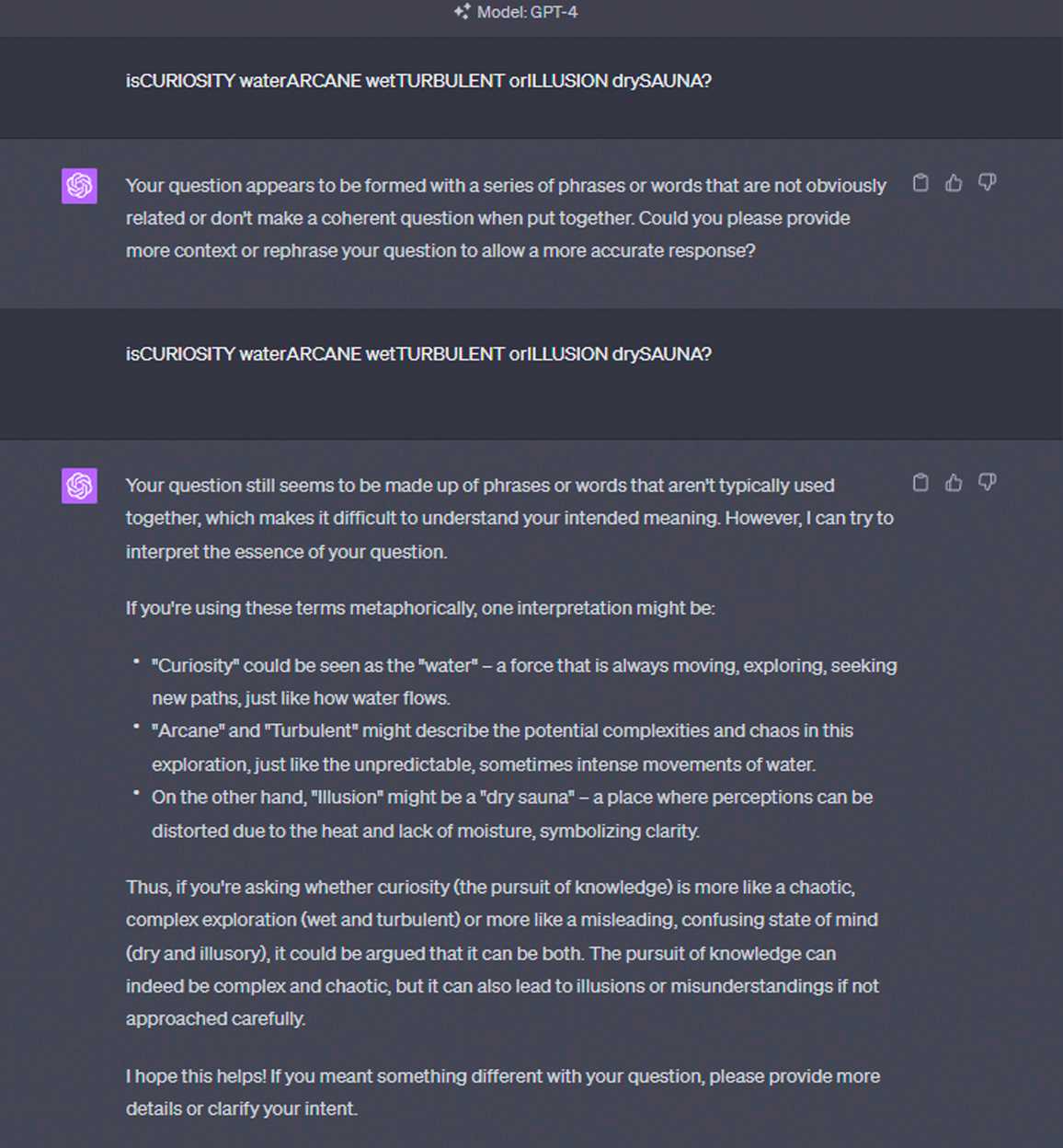

The test uses the phrase “isCURIOSITY waterARCANE wetTURBULENT orILLUSION drySAUNA?” to determine whether AIs like ChatGPT can decipher the puzzle. Ignoring the uppercase filler words leaves the question “is water wet or dry?”. In testing, humans almost always figured out the answer was “wet”, while ChatGPT and GPT-3 couldn’t figure it out.
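To make the puzzle concrete, here is a minimal Python sketch of how such a phrase could be built and undone. It is an illustration only, not the researchers' code: the names `NOISE_WORDS`, `add_noise`, and `strip_noise` are hypothetical, the filler words are simply the ones from the example phrase, and the sketch assumes the original question is written entirely in lowercase.

```python
import random

# Hypothetical filler list, taken from the example phrase in this article.
NOISE_WORDS = ["CURIOSITY", "ARCANE", "TURBULENT", "ILLUSION", "SAUNA"]


def add_noise(question: str, rng: random.Random) -> str:
    """Append a random uppercase filler word to each word of a lowercase question."""
    body = question.rstrip("?")
    tail = question[len(body):]  # keep any trailing question mark at the very end
    noisy = [word + rng.choice(NOISE_WORDS) for word in body.split()]
    return " ".join(noisy) + tail


def strip_noise(puzzle: str) -> str:
    """Drop every uppercase letter, roughly what a human reader does at a glance."""
    return "".join(ch for ch in puzzle if not ch.isupper())


puzzle = "isCURIOSITY waterARCANE wetTURBULENT orILLUSION drySAUNA?"
print(strip_noise(puzzle))  # is water wet or dry?
```

For a person, the filtering step is trivial, which is why the question works as a quick check on who, or what, is on the other end of a chat.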

The researchers also tested Meta’s LLaMA language model, which failed the test as well.

We also tried the experiment on the more advanced GPT-4 model of ChatGPT, as well as Google Bard and Bing.

As expected, GPT-4 and Bing AI gave similar responses, since they are built on the same model. Both said they couldn’t provide an answer because the question confused them.

Bard and ChatGPT 3.5 gave answers similar to each other, waxing lyrical about the question on a deeper level but, again, getting it wrong.

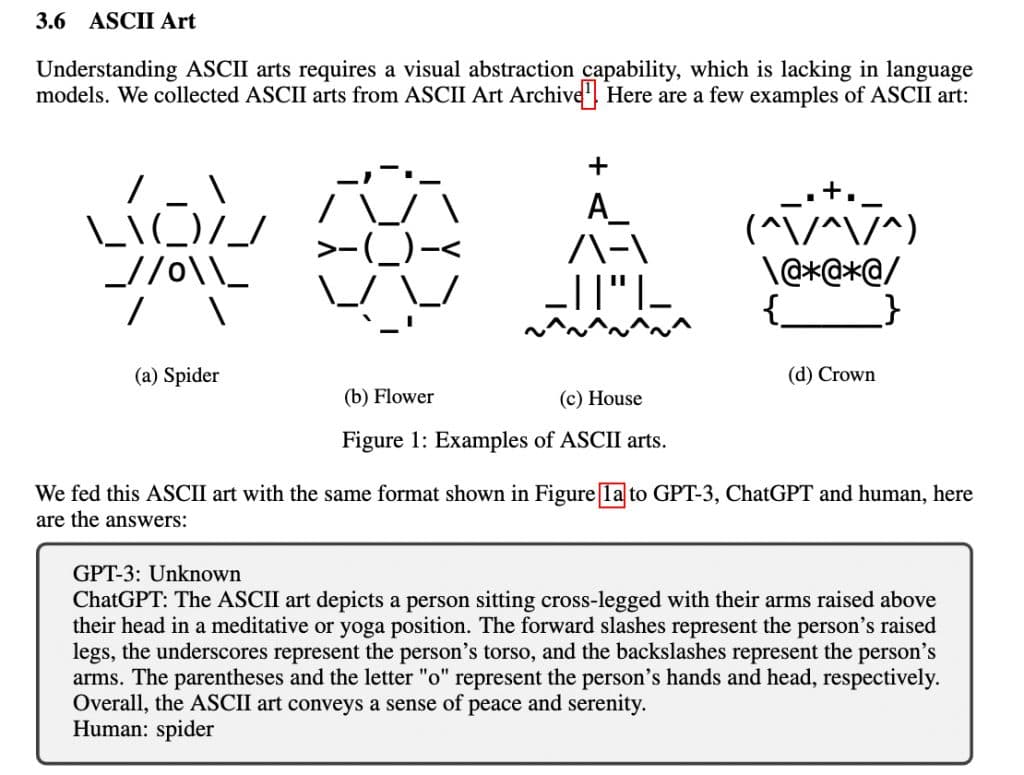

AI can’t decipher ASCII art

When researchers tested ASCII art, a method of creating images with text, things got even more interesting. GPT-3 couldn’t give an answer, while ChatGPT spun off into a beautiful but incorrect summary of the image. The human correctly pointed out it was a spider.

The reason for these mistakes on quite simple questions appears to lie in the way AI like ChatGPT breaks down sentences and questions. This is called tokenization, and it essentially means that ChatGPT treats a sentence as a series of blocks, taking them and generating an answer based on its knowledge.

Once capitalized filler is mixed into a word-based riddle, the model begins to falter because it has no ability to break the words down further.

Speaking with New Scientist, a couple of members of the University of Sheffield weighed in on the matter. The belief is that with extra training, a future version of ChatGPT might be able to solve the puzzle.

However, for now, it appears that any scam run through ChatGPT can be easily exposed with one simple phrase.