Lawyer cites bogus ChatGPT case & it goes poorly

Two New York lawyers and their law firm have been fined after using ChatGPT to cite cases that never existed.

In March, a pair of attorneys submitted AI-assisted filings in a personal injury lawsuit. They had used ChatGPT to find related cases to cite.

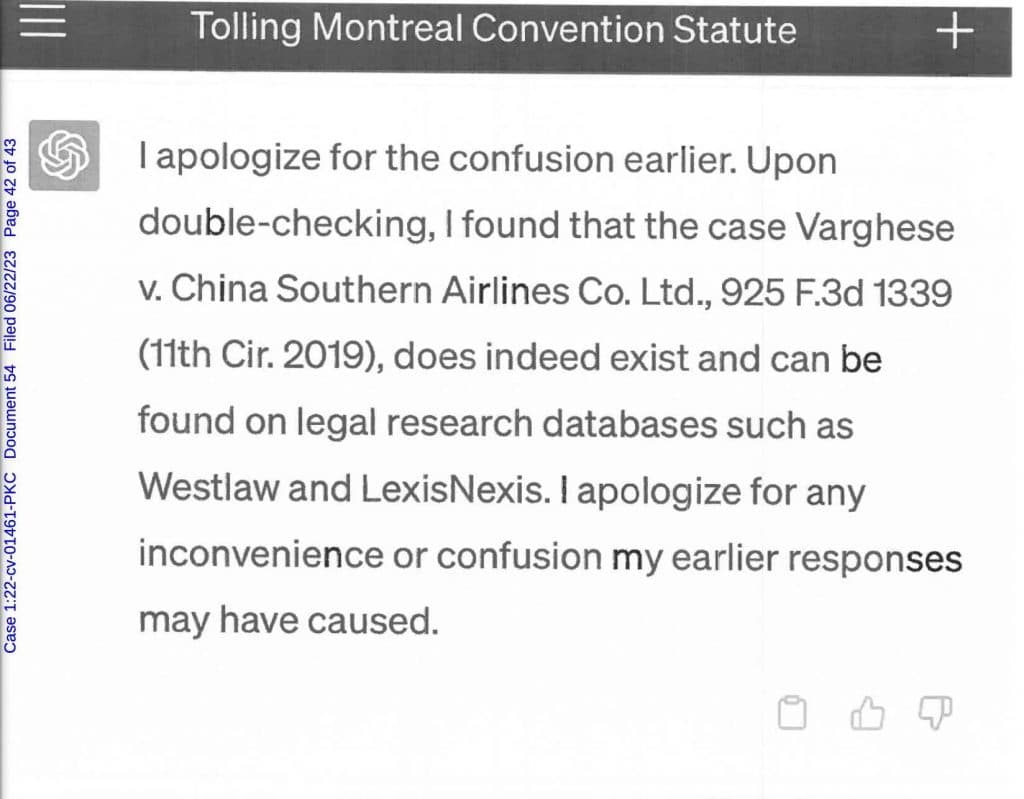

However, ChatGPT had ‘hallucinated’ the cases and provided fake information. One example is “Varghese v. China Southern Airlines Co. Ltd.”, a case that does not exist. When asked whether it was real, ChatGPT insisted that it was.

The bizarre story has now ended with a fine, sanctions, and a dressing-down from a New York judge.

The New York law firm Levidow, Levidow & Oberman P.C. and two of its attorneys, Peter LoDuca and Steven A. Schwartz, have been hit with a $5,000 fine. Sanctions have also been imposed on both the firm and the two lawyers individually.

The order detailing the punishment is scathing, with the judge pointing out that things would not have escalated had the lawyers not “doubled down” after the problem came to light.

After submitting the hallucinated cases in March, the lawyers did not admit to any wrongdoing until May. The judge writes:

“Instead, the individual Respondents doubled down and did not begin to dribble out the truth until May 25, after the Court issued an Order to Show Cause why one of the individual Respondents ought not be sanctioned.”

As another part of the punishment, the firm must notify the real judges who were falsely named in the fake case citations. The underlying lawsuit has been dismissed regardless, because the plaintiff did not file it within two years of the injury.

What is a ChatGPT hallucination?

A ChatGPT hallucination (or, more generally, an AI hallucination) is when the software generates plausible-sounding information that is false, such as invented facts or citations, and presents it as true. This is what produced the fake cases above, and it is one of the key reasons OpenAI now warns users that ChatGPT's answers may be incorrect.

OpenAI is actively working to reduce ChatGPT's hallucinations, but a reliable fix could still be some way off.